RealFusion: 360° Reconstruction of Any Object from a Single Image

Abstract

We consider the problem of reconstructing a full 360° photographic model of an object from a single image of it. We do so by fitting a neural radiance field to the image, but find this problem to be severely ill-posed. We thus take an off-the-shelf conditional image generator based on diffusion and engineer a prompt that encourages it to "dream up" novel views of the object. Using an approach inspired by DreamFields and DreamFusion, we fuse the given input view, the conditional prior, and other regularizers in a final, consistent reconstruction. We demonstrate state-of-the-art reconstruction results on benchmark images when compared to prior methods for monocular 3D reconstruction of objects. Qualitatively, our reconstructions provide a faithful match of the input view and a plausible extrapolation of its appearance and 3D shape, including to the side of the object not visible in the image.

Examples

Examples. RealFusion reconstructions from a single input view.

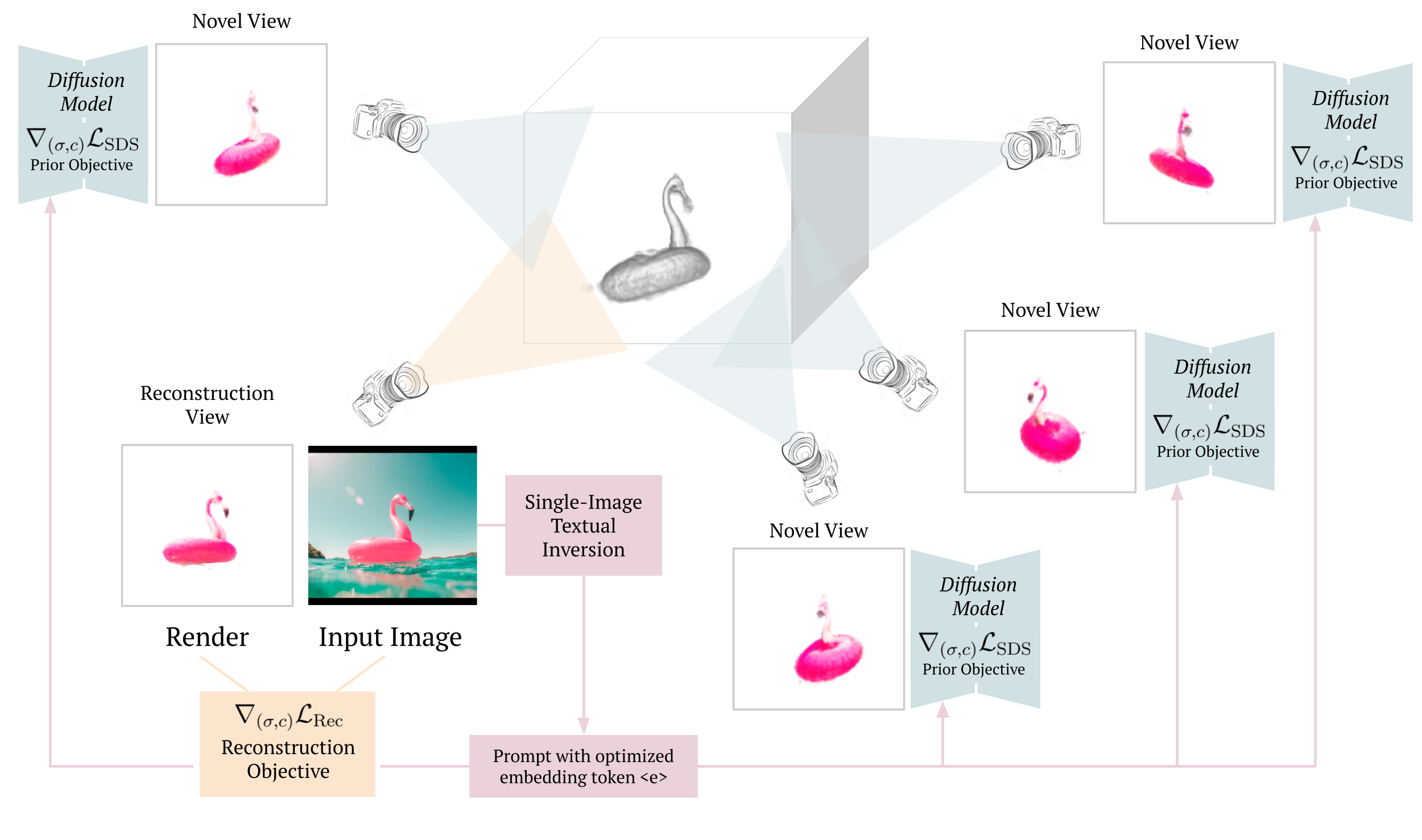

Diagram

Method diagram. Our method optimizes a neural radiance field using two objectives simultaneously: a reconstruction objective and a prior objective. The reconstruction objective ensures that the radiance field resembles the input image from a specific, fixed view. The prior objective uses a large pre-trained diffusion model to ensure that the radiance field looks like the given object from randomly sampled novel viewpoints. The key to making this process work well is to condition the diffusion model on a prompt with a custom token <e>, which is generated prior to reconstruction using single-image textual inversion.
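The two-objective structure described above can be illustrated with a small toy sketch. It is not the authors' implementation: the renderer and the diffusion prior are stand-in callables supplied by the caller, and the prompt wording, camera ranges, loss weighting, and all function names (`sample_novel_camera`, `reconstruction_loss`, `combined_loss`) are assumptions made only for illustration.

```python
import math
import random

# Hypothetical prompt wording; <e> stands for the token learned by textual inversion.
PROMPT = "an image of a <e>"


def sample_novel_camera(rng, radius=2.0):
    """Sample a camera position on a sphere around the object (assumed ranges)."""
    azimuth = rng.uniform(0.0, 2.0 * math.pi)
    elevation = rng.uniform(-math.pi / 6.0, math.pi / 3.0)
    return (
        radius * math.cos(elevation) * math.cos(azimuth),
        radius * math.cos(elevation) * math.sin(azimuth),
        radius * math.sin(elevation),
    )


def reconstruction_loss(rendered, target):
    """Mean squared error between two equal-length lists of pixel values."""
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(target)


def combined_loss(render, prior_loss, input_image, input_camera, rng, prior_weight=1.0):
    """Sum of the fixed-view reconstruction term and a novel-view prior term.

    render(camera) -> list of pixel values (stand-in for the radiance field).
    prior_loss(image, prompt) -> float (stand-in for the diffusion-based prior).
    """
    # Reconstruction objective: match the input image from its fixed view.
    rec = reconstruction_loss(render(input_camera), input_image)
    # Prior objective: score a render from a randomly sampled novel viewpoint.
    novel_camera = sample_novel_camera(rng)
    prior = prior_loss(render(novel_camera), PROMPT)
    return rec + prior_weight * prior
```

In an actual system the returned value would be minimized with respect to the radiance field parameters by gradient descent, sampling a fresh novel viewpoint at each step; the sketch only shows how the two terms are combined.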

Citation

@inproceedings{melaskyriazi2023realfusion,
  title={RealFusion: 360° Reconstruction of Any Object from a Single Image},
  author={Luke Melas-Kyriazi and Christian Rupprecht and Iro Laina and Andrea Vedaldi},
  year={2023},
  booktitle={Arxiv}
}

Acknowledgements

L.M.K. is supported by the Rhodes Trust. A.V. and C.R. are supported by ERC-UNION-CoG-101001212. C.R. is also supported by VisualAI EP/T028572/1.