PC2: Projection-Conditioned Point Cloud Diffusion for Single-Image 3D Reconstruction

Abstract

Reconstructing the 3D shape of an object from a single RGB image is a long-standing and highly challenging problem in computer vision. In this paper, we propose a novel method for single-image 3D reconstruction which generates a sparse point cloud via a conditional denoising diffusion process. Our method takes as input a single RGB image along with its camera pose and gradually denoises a set of 3D points, whose positions are initially sampled randomly from a three-dimensional Gaussian distribution, into the shape of an object. The key to our method is a geometrically-consistent conditioning process which we call projection conditioning: at each step in the diffusion process, we project local image features onto the partially-denoised point cloud from the given camera pose. This projection conditioning process enables us to generate high-resolution sparse geometries that are well-aligned with the input image, and can additionally be used to predict point colors after shape reconstruction. Moreover, due to the probabilistic nature of the diffusion process, our method is naturally capable of generating multiple different shapes consistent with a single input image. In contrast to prior work, our approach not only performs well on synthetic benchmarks, but also gives large qualitative improvements on complex real-world data.

Examples

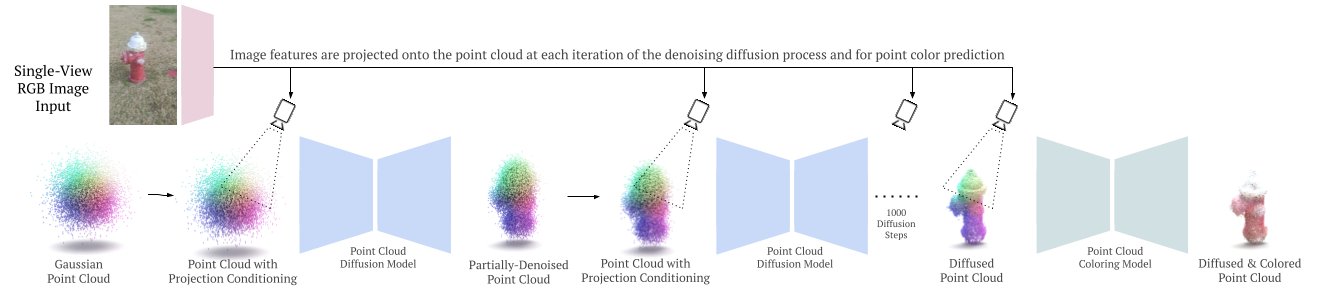

Method Diagram. PC2 reconstructs a colored point cloud from a single input image along with its camera pose. The method has two stages, both of which use our projection conditioning method. First, we gradually denoise a set of points into the shape of an object. At each step in the diffusion process, we project image features onto the partially-denoised point cloud from the given camera pose, augmenting each point with a set of neural features. This step makes the diffusion process conditional on the image in a geometrically-consistent manner, enabling high-quality shape reconstruction. Second, we predict the color of each point using a model based on the same projection procedure.
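The projection step described above can be illustrated with a minimal NumPy sketch. It is not the released implementation: the function name `project_features`, the pinhole camera convention (`K`, `R`, `t`), and the nearest-pixel lookup are all assumptions made for illustration, and the sketch ignores occlusion between points.

```python
import numpy as np

def project_features(points, feature_map, K, R, t):
    """Attach to each 3D point the image feature found at its 2D projection.

    Hypothetical helper for illustration only.
    points:      (N, 3) world-space point positions
    feature_map: (H, W, C) per-pixel image features
    K:           (3, 3) pinhole intrinsics in pixel units (assumed convention)
    R, t:        world-to-camera rotation (3, 3) and translation (3,)
    Returns an (N, 3 + C) array: coordinates concatenated with features.
    """
    H, W, C = feature_map.shape

    # World coordinates -> camera coordinates.
    cam = points @ R.T + t
    z = cam[:, 2]
    in_front = z > 1e-6
    safe_z = np.where(in_front, z, 1.0)  # avoid dividing by zero or negative depth

    # Perspective projection to pixel coordinates (u = column, v = row).
    uvw = cam @ K.T
    col = np.round(uvw[:, 0] / safe_z).astype(int)
    row = np.round(uvw[:, 1] / safe_z).astype(int)

    # Points behind the camera or outside the image receive zero features.
    visible = in_front & (col >= 0) & (col < W) & (row >= 0) & (row < H)
    feats = np.zeros((points.shape[0], C), dtype=feature_map.dtype)
    feats[visible] = feature_map[row[visible], col[visible]]

    return np.concatenate([points, feats], axis=1)
```

In a diffusion setting, a function like this would be called at every denoising step on the current noisy point positions, so the features attached to each point change as the points move.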

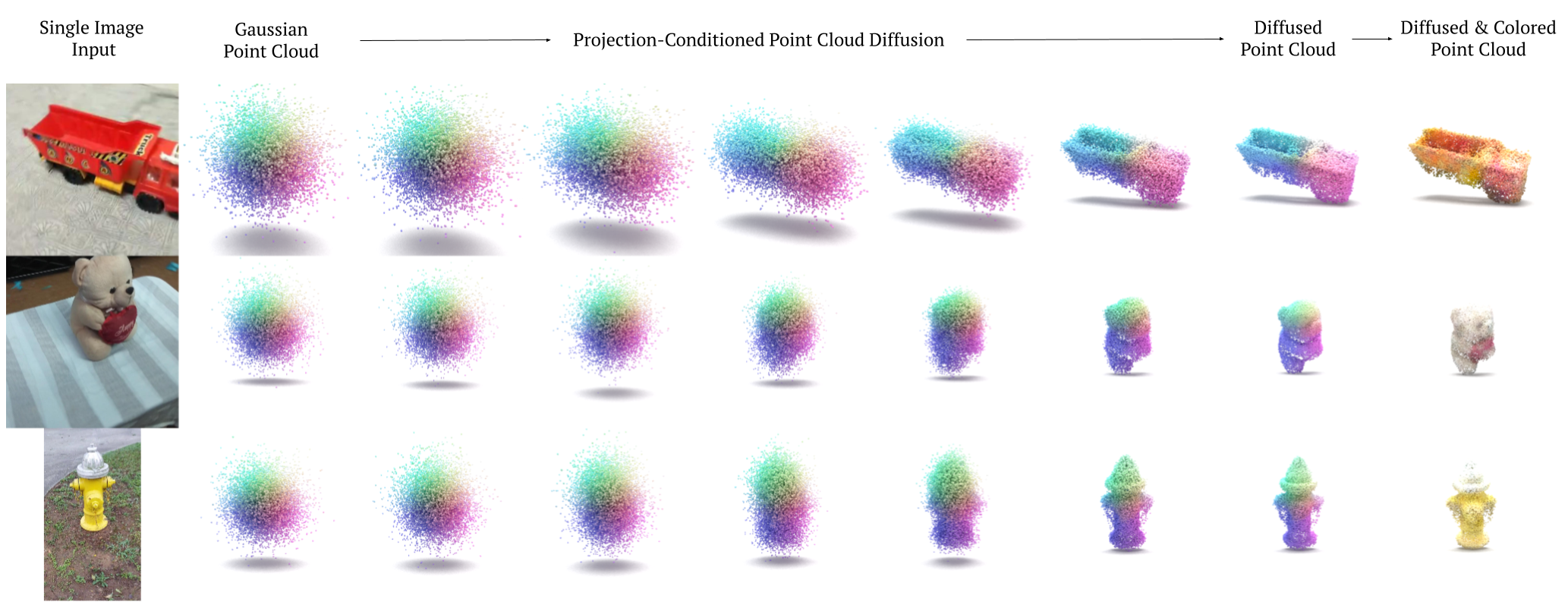

PC2 performs single-image 3D point cloud reconstruction by gradually diffusing an initially random point cloud to align with the input image. It is trained with simple, sparse COLMAP supervision from videos.
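To make the idea of gradually turning a random point cloud into a shape more concrete, here is a rough sketch of a reverse diffusion loop. It uses the standard DDPM ancestral sampling update with a linear noise schedule, which is an assumption rather than a confirmed detail of PC2. The callables `predict_noise` (standing in for a trained network) and `condition` (standing in for the image-conditioning step) are hypothetical placeholders.

```python
import numpy as np

def sample_point_cloud(predict_noise, condition, num_points=1024,
                       num_steps=100, seed=0):
    """Sketch of DDPM-style sampling for a point cloud (illustrative only).

    predict_noise(x_cond, t) -> (num_points, 3) predicted noise (hypothetical)
    condition(x)             -> conditioned per-point input    (hypothetical)
    """
    rng = np.random.default_rng(seed)

    # Assumed linear beta schedule; the schedule actually used may differ.
    betas = np.linspace(1e-4, 0.02, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)

    # Start from points sampled from a 3D Gaussian.
    x = rng.standard_normal((num_points, 3))

    for t in reversed(range(num_steps)):
        # Re-condition on the image at every step, using the current points.
        x_cond = condition(x)
        eps = predict_noise(x_cond, t)

        # Posterior mean of the standard DDPM reverse step.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])

        # Add noise at every step except the last one.
        noise = rng.standard_normal(x.shape) if t > 0 else 0.0
        x = mean + np.sqrt(betas[t]) * noise

    return x
```

Because the loop is stochastic, running it with different seeds on the same conditioning produces different point clouds, which is the mechanism behind sampling several plausible shapes for one image.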

Examples on three real-world categories from Co3D: toy trucks, teddy bears, and hydrants.

Citation

@inproceedings{melaskyriazi2023projection,
  title={PC2: Projection-Conditioned Point Cloud Diffusion for Single-Image 3D Reconstruction},
  author={Luke Melas-Kyriazi and Christian Rupprecht and Andrea Vedaldi},
  year={2023},
  booktitle={Arxiv}
}

Acknowledgements

L.M.K. is supported by the Rhodes Trust. A.V. and C.R. are supported by ERC-UNION-CoG-101001212. C.R. is also supported by VisualAI EP/T028572/1.