A Benchmark for Learning to Translate a New Language from One Grammar Book

Abstract

Large language models (LLMs) can perform impressive feats with in-context learning or lightweight finetuning. It is natural to wonder how well these models adapt to genuinely new tasks, but how does one find tasks that are unseen in internet-scale training sets? We turn to a field that is explicitly motivated and bottlenecked by a scarcity of web data: low-resource languages. In this paper, we introduce MTOB (Machine Translation from One Book), a benchmark for learning to translate between English and Kalamang (a language with fewer than 200 speakers and therefore virtually no presence on the web) using several hundred pages of field linguistics reference materials. This task framing is novel in that it asks a model to learn a language from a single human-readable book of grammar explanations rather than from a large mined corpus of in-domain data, a setup more akin to L2 learning than to L1 acquisition. We demonstrate that baselines using current LLMs are promising but fall short of human performance, achieving 44.7 chrF on Kalamang to English translation and 45.8 chrF on English to Kalamang translation, compared to 51.6 and 57.0 chrF by a human who learned Kalamang from the same reference materials. We hope that MTOB will help measure LLM capabilities along a new dimension, and that the methods developed to solve it could help expand access to language technology for underserved communities by leveraging qualitatively different kinds of data than traditional machine translation.
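Because every score on this page is reported in chrF, a minimal sentence-level sketch of the metric may help when reading the numbers. It follows the character n-gram F-score idea: n-grams of orders 1 to 6, whitespace ignored, and recall weighted more heavily than precision via the common default β = 2. The function names are hypothetical, and standard toolkits such as sacreBLEU differ in details (for example, how per-order scores are averaged and how statistics are aggregated over a whole test set), so this is an illustration, not the benchmark's evaluation code.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams after removing all whitespace."""
    chars = "".join(text.split())
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def chrf(hypothesis: str, reference: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified sentence-level chrF on a 0-100 scale (illustrative sketch)."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp = char_ngrams(hypothesis, n)
        ref = char_ngrams(reference, n)
        # Counter intersection keeps the minimum count, i.e. clipped matches.
        matches = sum((hyp & ref).values())
        if hyp:
            precisions.append(matches / sum(hyp.values()))
        if ref:
            recalls.append(matches / sum(ref.values()))
    if not precisions or not recalls:
        return 0.0
    # Average precision and recall over the n-gram orders that occur.
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    b2 = beta ** 2
    # F-beta: beta > 1 weights recall more heavily than precision.
    return 100 * (1 + b2) * p * r / (b2 * p + r)
```

For example, `chrf("the dog ran", "the dog runs")` gives partial credit for the shared characters, whereas a word-level exact-match metric would count "ran" and "runs" as entirely different. Character-level partial credit is one reason chrF is often preferred for morphologically rich or low-resource languages.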

Results

Machine translation performance (chrF scores) for kgv-to-eng (left) and eng-to-kgv (right) translation across experimental settings. See Section 4.1.1 for details on the models in the legend; -if represents finetuning on the grammar book text. See Section 4.1.2 for details on the provided context: "-" represents no context, W represents word list entries, S sentence pairs, G$^s$ grammar book excerpts, G$^m$ ~50K grammar book tokens, G$^l$ ~100K grammar book tokens, and + denotes combinations thereof. Quality depends on both the underlying model and the provided reference materials, with the best results coming from Claude 2 in the W + S + G$^l$ setting. Human performance considerably exceeds all model baselines.
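The settings in the legend combine different reference materials. The sketch below shows one hypothetical way a setting such as W + S + G$^l$ could be turned into a prompt. The section headers, their order, the `ReferenceContext` container, the `build_prompt` function, and the ASCII setting codes (`G^s`, `G^m`, `G^l` standing in for G$^s$, G$^m$, G$^l$) are all assumptions, not the benchmark's actual prompt format; choosing which grammar text fills the excerpt, ~50K, or ~100K token budget is left to the caller.

```python
from dataclasses import dataclass, field

# Hypothetical ASCII codes for the three grammar-book context sizes.
GRAMMAR_CODES = {"G^s", "G^m", "G^l"}


@dataclass
class ReferenceContext:
    """Hypothetical container for already-retrieved reference material."""
    word_entries: list = field(default_factory=list)    # W: "headword: gloss" lines
    sentence_pairs: list = field(default_factory=list)  # S: (source, target) tuples
    grammar_text: str = ""                              # G^s / G^m / G^l text


def build_prompt(source: str, setting: str, ctx: ReferenceContext,
                 direction: str = "Kalamang to English") -> str:
    """Assemble a prompt from a setting string such as "W + S + G^l" or "-"."""
    codes = set() if setting.strip() == "-" else {c.strip() for c in setting.split("+")}
    parts = []
    # The order of sections here is an arbitrary choice for illustration.
    if codes & GRAMMAR_CODES and ctx.grammar_text:
        parts.append("Grammar book excerpt:\n" + ctx.grammar_text)
    if "W" in codes and ctx.word_entries:
        parts.append("Word list entries:\n" + "\n".join(ctx.word_entries))
    if "S" in codes and ctx.sentence_pairs:
        pairs = "\n".join(f"{src} => {tgt}" for src, tgt in ctx.sentence_pairs)
        parts.append("Example sentence pairs:\n" + pairs)
    parts.append(f"Translate from {direction}:\n{source}\nTranslation:")
    return "\n\n".join(parts)
```

With the "-" setting the prompt contains only the translation instruction, which corresponds to asking the model to translate from its pretraining knowledge alone.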

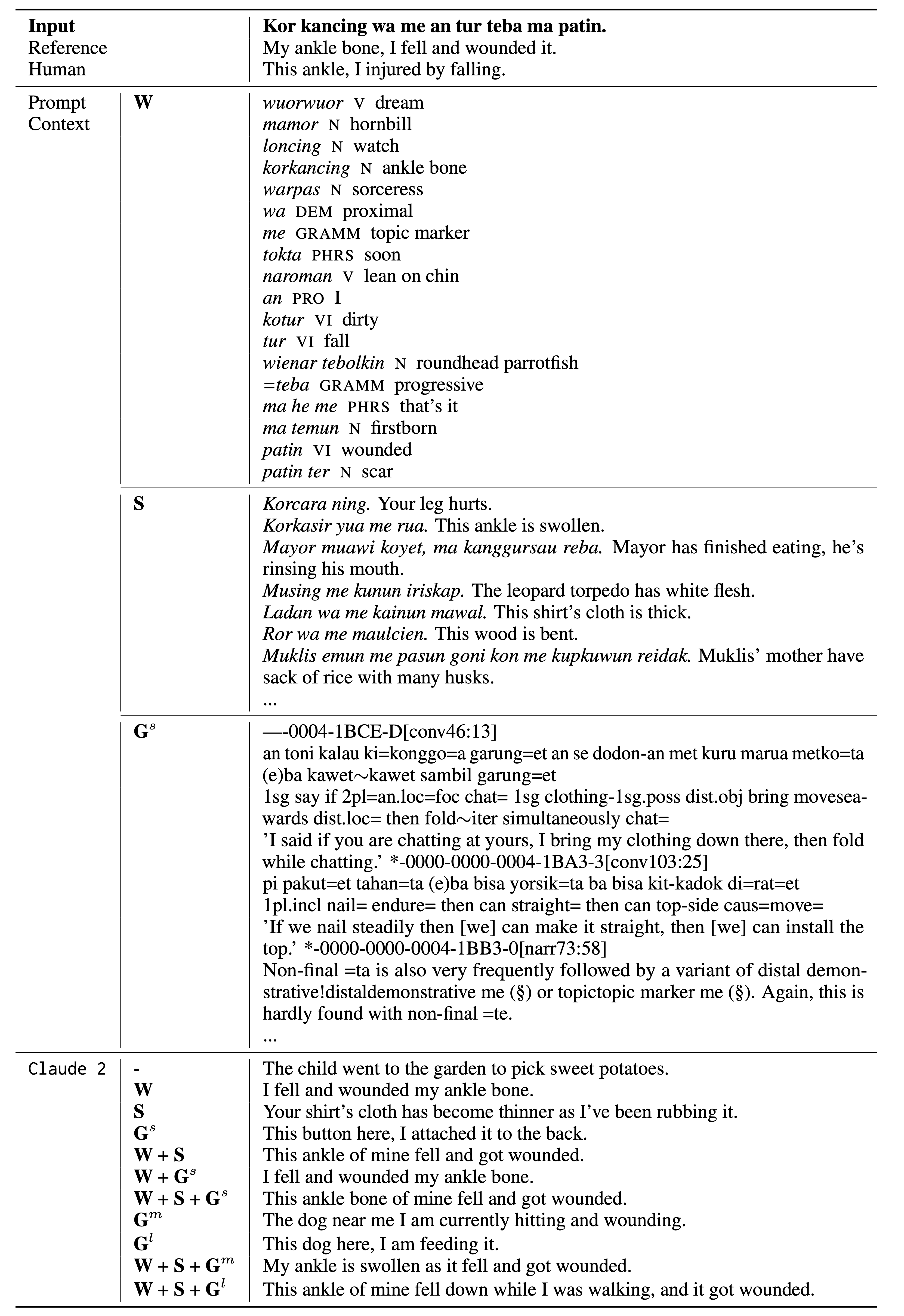

Qualitative Examples

Qualitative examples of kgv-to-eng translation with Claude 2 across different types of retrieved context. We omit some of the provided context to save space. In this particular case, the retrieved words were largely sufficient to guess the translation, and extra grammatical information led to more faithful but also more awkward outputs.
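The word-list context in these examples is retrieved per source word. As a minimal sketch, assuming lowercase headwords and using Python's standard `difflib` for approximate string matching (a stand-in technique, not necessarily the benchmark's retrieval method), per-word lookup might look like this; `retrieve_word_entries`, `per_word`, and `cutoff` are hypothetical names.

```python
import difflib


def retrieve_word_entries(source: str, word_list: dict,
                          per_word: int = 2, cutoff: float = 0.6) -> list:
    """Return "headword: gloss" lines for words in a source sentence.

    Assumes word_list maps lowercase headwords to English glosses.
    """
    headwords = list(word_list)
    seen, entries = set(), []
    for token in source.lower().split():
        token = token.strip(".,?!;:\"'")
        if not token:
            continue
        # Exact hit first, then the closest approximate matches (no duplicates).
        matches = [token] if token in word_list else []
        for m in difflib.get_close_matches(token, headwords, n=per_word, cutoff=cutoff):
            if m not in matches:
                matches.append(m)
        for hw in matches[:per_word]:
            if hw not in seen:
                seen.add(hw)
                entries.append(f"{hw}: {word_list[hw]}")
    return entries
```

Matching approximately rather than exactly lets inflected or misspelled forms still surface a related headword, at the cost of occasionally retrieving unrelated entries; raising `cutoff` trades recall for precision.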

Citation

@inproceedings{tanzer2023mtob,
  title={A Benchmark for Learning to Translate a New Language from One Grammar Book},
  author={Garrett Tanzer and Mirac Suzgun and Eline Visser and Dan Jurafsky and Luke Melas-Kyriazi},
  year={2023},
  booktitle={arXiv}
}

Acknowledgements

L.M.K. is supported by the Rhodes Trust.